It’s been less than a year since Apple introduced Apple Intelligence, the company’s take on the artificial intelligence trend that started a few years ago.

While Apple Intelligence hasn’t been as big a hit as Apple probably hoped, it already has some neat features you can use now, including a new technology called visual intelligence.

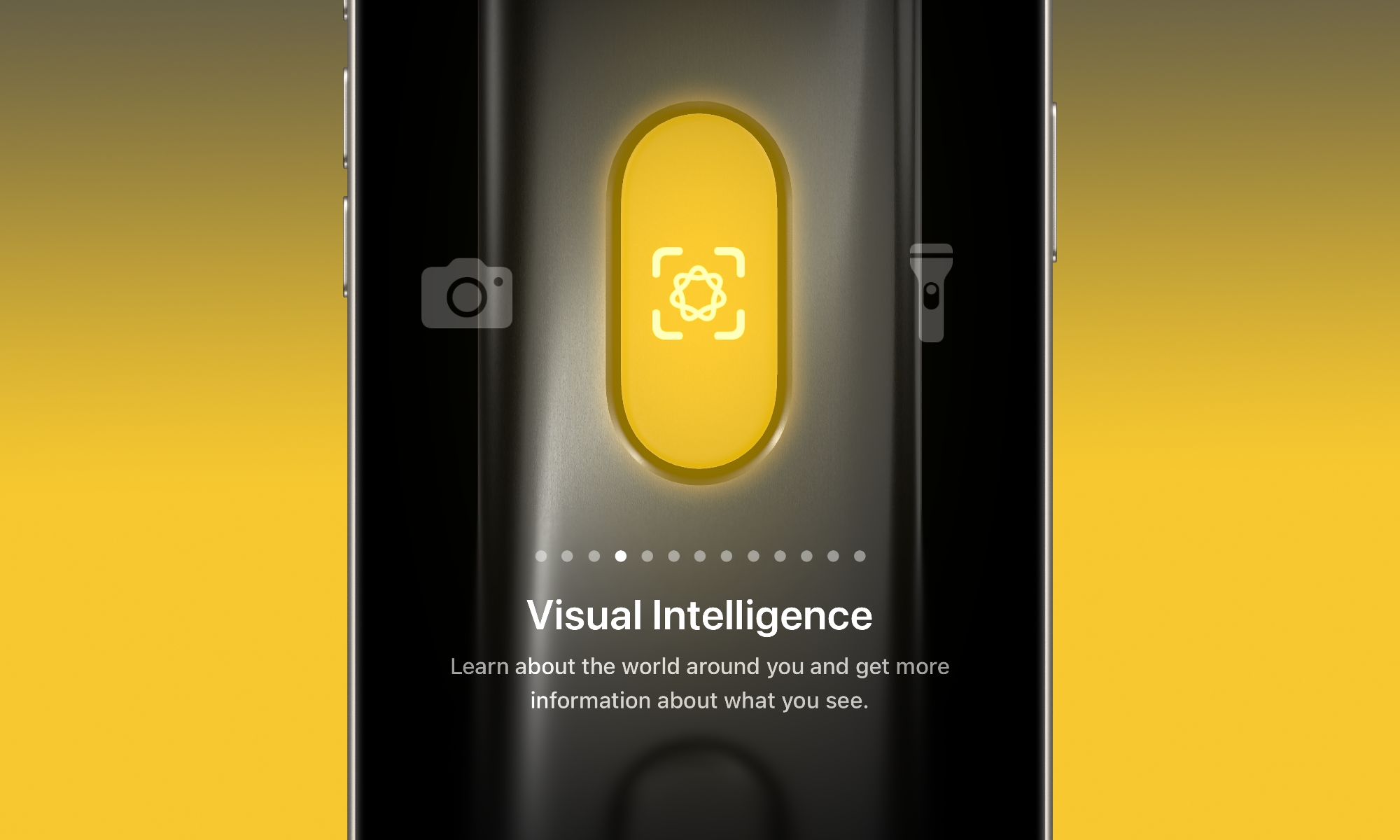

Apple surprised us with visual intelligence during the iPhone 16 event in September 2024, announcing it separately from its other AI features as a new way to use your camera to learn more about the world around you by using the new Camera Control button.

While this is a cool tool, not everyone might be familiar with it. Fortunately, that’s what we’re here for. Here’s everything you need to know about this new feature and how to use it on your iPhone 16 — or your iPhone 15 Pro when iOS 18.4 arrives next month.

What Is Visual Intelligence?

As we mentioned, visual intelligence is a new feature of Apple Intelligence. This technology is meant to help you learn more about the things around you.

This feature lets you point your iPhone’s camera at a real-world object and ask for more information about it. You can use the web to search for more information or get a more personalized answer from ChatGPT.

Visual intelligence doesn’t use Siri to answer your questions, as Siri isn’t yet smart enough for the job. Instead, you’ll have to use ChatGPT. Of course, that’s not a bad thing; ChatGPT is a powerful AI tool that will help you the best it can.

What Do You Need to Start Using Visual Intelligence?

You will need a few things to get started with visual intelligence. Unfortunately for users with older iPhones, this feature is currently only available on the iPhone 16 lineup. That includes the more affordable iPhone 16e, which lacks a Camera Control and uses the Action button instead. Because the feature can work without a Camera Control, it’s also coming to the iPhone 15 Pro and iPhone 15 Pro Max, but not until iOS 18.4 launches next month. Anything older than that won’t support visual intelligence, as only the iPhone 15 Pro and later models support any Apple Intelligence features.

You also need to be in the right place. Unfortunately, Apple Intelligence and visual intelligence aren’t available in the European Union, at least not yet. If you live in that region, or if your Apple Account (Apple ID) is set to a country or region in the EU, you won’t be able to use this feature. However, that’s also expected to change when iOS 18.4 lands in April.

You also need to have the correct version of iOS. For the iPhone 16, iPhone 16 Plus, iPhone 16 Pro, and iPhone 16 Pro Max, that’s iOS 18.2 or later. The iPhone 16e requires iOS 18.3, which is the earliest version that newer model shipped with anyway. The iPhone 15 Pro and iPhone 15 Pro Max require iOS 18.4, which is currently in public beta and should be released in April.

You can check which iOS version you have installed on your iPhone by going to Settings > General > Software Update. If there’s a software update available, be sure to install it.

Lastly, you need to have Apple Intelligence enabled on your iPhone to use visual intelligence. Chances are you already have it on, but you can double-check by going to Settings > Apple Intelligence & Siri and then turning on Apple Intelligence.

Once you have all that covered, you can use visual intelligence on your iPhone.
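If you like seeing rules spelled out, the requirements above can be sketched as a small Python check. This is only an illustration of the model and iOS version pairs listed in this article, not anything Apple provides; the function and table names are made up for the example, and it deliberately ignores the regional restrictions.

```python
# Minimum iOS version each supported model needs, as listed in this article.
# Illustrative only: the table and function names below are hypothetical.
MIN_IOS = {
    "iPhone 16": (18, 2),
    "iPhone 16 Plus": (18, 2),
    "iPhone 16 Pro": (18, 2),
    "iPhone 16 Pro Max": (18, 2),
    "iPhone 16e": (18, 3),
    "iPhone 15 Pro": (18, 4),
    "iPhone 15 Pro Max": (18, 4),
}


def parse_version(text):
    """Turn a version string such as '18.3.1' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def supports_visual_intelligence(model, ios_version, apple_intelligence_on=True):
    """Return True if the model, iOS version, and setting meet the requirements.

    Regional availability (for example, the EU) is not modeled here.
    """
    minimum = MIN_IOS.get(model)
    if minimum is None:
        # Models older than the iPhone 15 Pro are not supported at all.
        return False
    return apple_intelligence_on and parse_version(ios_version) >= minimum


if __name__ == "__main__":
    print(supports_visual_intelligence("iPhone 16", "18.2"))        # meets the minimum
    print(supports_visual_intelligence("iPhone 15 Pro", "18.3.1"))  # needs iOS 18.4
```

Comparing versions as tuples of numbers rather than as text keeps something like 18.10 from being treated as older than 18.2.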

How to Use Visual Intelligence on Your iPhone 16 and iPhone 16e

Getting started with visual intelligence is very easy. On your iPhone 16, iPhone 16 Plus, iPhone 16 Pro, or iPhone 16 Pro Max, press and hold the Camera Control on the bottom right side.

Since the iPhone 16e doesn’t have a Camera Control, users of that model have several other options for triggering visual intelligence. First, you can set up your Action button to open visual intelligence. All you need to do is go to Settings > Action Button and swipe to the left until you find the visual intelligence option.

Additionally, you can add a control to your iPhone’s Lock Screen. To change your Lock Screen controls, you’ll need to go to Settings > Wallpaper and then tap on your Lock Screen. Next, tap the minus button on top of one of the controls at the bottom of your screen. Then, tap the plus button and select the visual intelligence control. Tap on Done in the top right corner of your screen when you finish.

Lastly, you can add a visual intelligence control to your iPhone 16e’s Control Center. Open the Control Center by swiping down from the top right corner of your screen and then tap on the plus icon in the top left corner of your screen. Search for the visual intelligence control and add it to your Control Center.

The Action button, Lock Screen, and Control Center options are exclusive to the iPhone 16e right now. They’ll be coming to all iPhones that support Apple Intelligence in iOS 18.4, letting iPhone 15 Pro and iPhone 15 Pro Max users access visual intelligence and providing other ways for iPhone 16 users to trigger it if they’d prefer not to use the Camera Control.

Once you trigger visual intelligence, you can point your camera at any object you want to learn more about.

What Can Visual Intelligence Do?

As of this writing, Apple claims that you can use visual intelligence for four different things.

Learn More About a Business

You can use visual intelligence to get more information about a business. For instance, you can see the business’s hours of operation, price range, and contact information, and you can even check reviews or make reservations. Unfortunately, this feature is currently limited to the US, but it will hopefully be available in other regions soon.

Identify Plants and Animals in a Flash

Visual intelligence can also help you identify and get more information about animals and plants. After you open visual intelligence, point your camera at a plant or animal; without you asking for it, its name will appear near the top of your screen. You can tap that name to get more information about the plant or animal you’re pointing at.

Use Visual Intelligence to Interact With Text

You can also use visual intelligence to interact with text in many different ways. All you need to do is open visual intelligence and point your camera at any text you want. Next, take a photo by tapping the circle at the bottom of your screen or by pressing the Camera Control.

Once you take the picture, your iPhone will automatically analyze the text. You can ask it to do things like read the text out loud, create a summary of the text, translate it, make a call if the text is a phone number, visit a website if the text is a URL, send an email if the text is an email address, or create a calendar event if the text contains date and time information.

Use ChatGPT or Google With Visual Intelligence

Last, you can use visual intelligence to get more information from ChatGPT or Google. When you take a picture with visual intelligence, you’ll notice a small speech button near the bottom of your screen. When you tap it, you can ask ChatGPT what you’re looking at.

For instance, you can ask it to give you more information about an object or try to identify something for you. Keep in mind that it doesn’t work as well as it should; when we tried it, it kept telling us that a MacBook was an Intel computer.

Also, remember that you’ll need to have ChatGPT enabled on your iPhone to use this option. You can turn it on by going to Settings > Apple Intelligence & Siri > ChatGPT and then enabling the ChatGPT extension.

Of course, when ChatGPT fails, you’ll also be able to tap the search button that appears near the bottom to get results from Google. You’ll see images similar to the item you’re looking at.

Make the Most Out of Visual Intelligence

As you can see, visual intelligence is a really powerful tool that can help you in many different ways. Sure, both Apple Intelligence and visual intelligence are far from perfect, but they’re already capable of many things that can make your everyday life just a little bit easier.

Since it’s free and pretty straightforward to use, there’s no reason you shouldn’t give it a try — as long as you have the right iPhone, that is.
